Introducing Studio 3T’s Query Profiler

Want to find and investigate all the slow queries on your MongoDB databases? Try the Query Profiler where you can profile databases to quickly find out how long queries took to run, when queries ran, and how often queries ran. Analyze slow queries using the visual explain plan tool to check for missing indexes, and view the MongoDB query code. Get the Query Profiler to open the query in your preferred Studio 3T editor, ready for debugging MongoDB performance issues.

Try Studio 3T for freeWhat is the MongoDB Database Profiler?

The MongoDB Database Profiler captures and logs data about operations, for example:

- operation type, such as find and aggregate queries, updates, inserts, deletes, or administration commands

- execution time

- namespace (a combination of the database name and collection name)

- query code (the query document)

The Query Profiler uses this data to identify slow queries, so that you can optimize MongoDB performance by improving query code, and adding indexes or changing the fields in existing indexes.

The MongoDB Database Profiler stores the data in the system.profile collection. You can view the system.profile data in Studio 3T, just like any other MongoDB collection, but it’s not easy to extract collection, filter, and option data for find and aggregate queries in a meaningful way. Studio 3T’s Query Profiler extracts and analyzes the profiling data so that you can understand why your queries are running slowly and shows you the query code for find and aggregation queries so that you can fix them and improve MongoDB performance.

Opening the Query Profiler

To open the Query Profiler and start monitoring MongoDB performance:

- Global Toolbar – Locate the database you want to profile in the connection tree and click the Query Profiler button

- Right-click – In the connection tree, right-click the database you want to profile, and then select Query Profiler.

- Right-click – In the connection tree, right-click a collection, and then select Query Profiler. This does not mean that you’ll be profiling data at collection level. You’ll be working with the entire database.

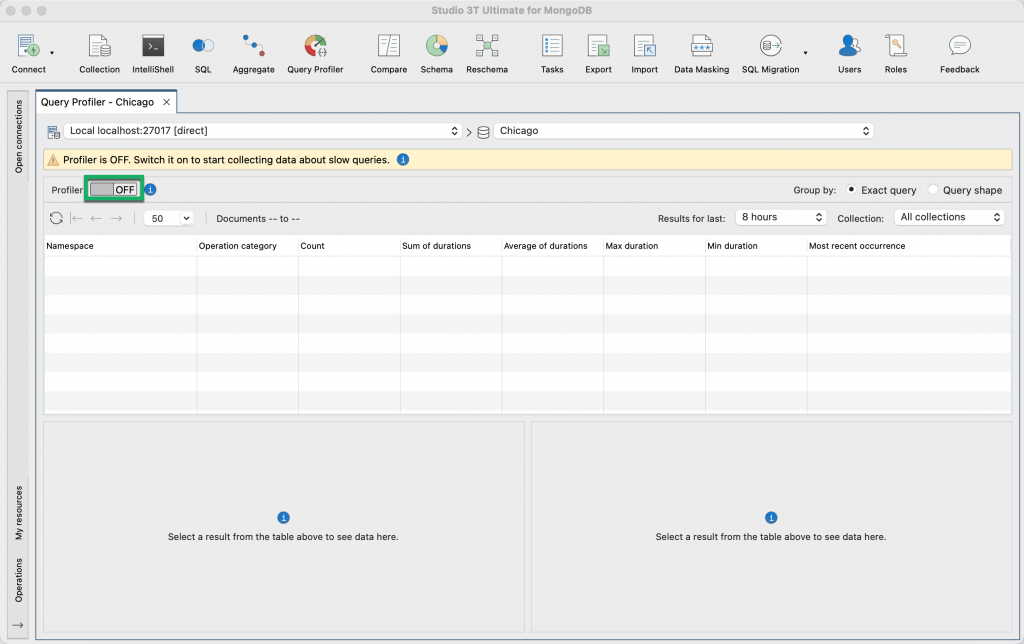

The Query Profiler opens in a new tab. By default, the profiler is switched off. To switch it on and start profiling MongoDB queries, click the Profiler switch.

Using the Profiler switch enables the MongoDB Database Profiler and sets the profiling level to 1.

The profiling levels are as follows:

- Level 0 – The Profiler is off and is not collecting data. You can continue to work with the profiling data that has already been collected.

- Level 1 – The Profiler collects data for find and aggregation queries that take longer than 100 milliseconds.

- Level 2 – The Profiler collects data for all operations. You can’t set the profiling level to 2 in the Query Profiler but you can use IntelliShell or the mongo shell to do this.

The MongoDB Database Profiler logs slow operations with:

- a slow operation threshold of 100 milliseconds

- a filter for find queries and aggregation queries

The Query Profiler does not allow you to change the profiling level, for information about how to do this in mongosh, see Configuring the MongoDB profiler.

Switching off the Query Profiler

Remember to switch off the profiler when you’re not monitoring MongoDB database performance to prevent unnecessary overhead, especially in production environments.

When the profiler is switched off, you’ll still be able to view the profiling data that has already been collected.

To switch off the profiler, click the Profiler switch.

Viewing slow queries in MongoDB

The Query Profiler displays the slow queries identified by the MongoDB Database Profiler.

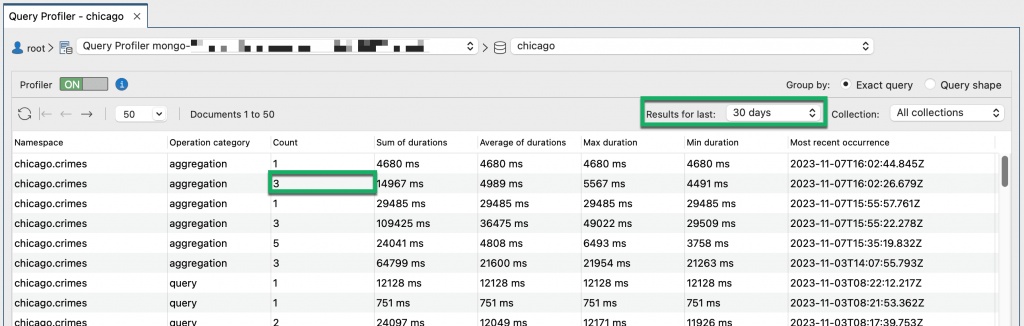

By default, the items in the list are sorted by Most recent occurrence, so if you’re investigating MongoDB performance issues in real-time on a live database, the slow queries that you need to troubleshoot are shown at the top of the list.

Count shows how often the query ran for the timeframe that is selected in the drop-down list. In the screenshot above, the query ran 3 times in the last 30 days.

You can group the slow queries by Exact query or Query shape. We’ll use the simple queries below to explain these groupings:

db.customers.find({"firstname": "John"})

db.customers.find({"firstname": "Mark"})

db.customers.find({"firstname": "Steve"})Exact query displays unique queries based on field names and field values. In the simple queries above, the queries have different values, so the Query Profiler interprets them as different queries and does not group them.

Query shape displays groups of queries with the same logical structure but ignoring field values. In the simple queries above, MongoDB maintains a single query shape for each of the 3 queries, for example db.customers.find({"firstname": <String>}), and generates a hash for the query shape. MongoDB writes the hash values to the queryHash field in the system.profile collection. In the Query Profiler, you can see the hash value in the Query hash column when you group by Query shape.

When you group by Exact query, you can see if a query is a find query or an aggregation query in the Operation category column.

To filter the list:

- for a particular collection in the database, select the Collection from the list

- by when the slow queries ran, select a timeframe from the Results for last list. When Query Profiler does not display any slow queries, you may find that going further back in time and selecting a broader timeframe retrieves profiling data.

Drilling down and interpreting the profiling results

You can drill down and see the profiling data for each slow query in a group. So if a query ran 6 times, as indicated by a value of 6 in the Count field in the top panel, the bottom left section of the Query Profiler displays 6 items.

You can see how long each query took to run and when each query ran by looking at the Duration and Timestamp fields.

Keys Examined shows the number of index entries that have been scanned. If Keys Examined is zero, select the query and click the View Full JSON button to check the planSummary key value. If the planSummary value is COLLSCAN, MongoDB has performed a full collection scan and this confirms you need to create indexes on your collection to improve MongoDB performance. Or, a faster way of checking index usage is to click the Explain plan tab to see if MongoDB performed a collection scan or an index scan.

Viewing the full JSON format

The Query Profiler displays only the most useful profiling data captured by the MongoDB Database Profiler, so that you can see potential performance problems at a glance. If you need to investigate further, you can view all the profiling data that is stored in system.profile for a query, by clicking the View Full JSON button.

Query Code

The Query Code tab shows the query in mongo shell language and it’s from here that you can debug and fix the slow query.

You can debug the code in Intellishell, by clicking Open in IntelliShell.

For find queries, you can debug the query in the Collection Tab, by clicking Open in Collection Tab.

For aggregation queries, you can debug the query in the Aggregation Editor, by clicking Open in Aggregation Editor. The Aggregation Editor makes it easier to troubleshoot the slow query by showing you the input data and output data for each stage in turn.

Explain plan

Explain plan is a visual representation of the performance of a MongoDB find query. It shows the query plan and the steps MongoDB took to run a query, with runtime statistics for each step.

Explain plan is useful for checking if a query is using an index. If it is not using one because MongoDB has performed a collection scan, and you want to create an index, locate the collection in the connection tree, right-click, and select Add Index. To find out more, see Adding an index.

If the query is already using an index, check that the query is filtering as much data as possible first of all. For a detailed explanation, see Optimize queries by applying relevant filters.

How to Use the MongoDB Profiler and explain() to Find Slow Queries (Tutorial)

When dealing with performance issues in any type of database, it usually pays to take a simple approach and deal with the queries that are causing the most problems first.

In this tutorial, you’ll learn precisely:

- how to find slow-performing, cost-intensive queries

- glean important statistics like how long a MongoDB query takes to run, when the query is being executed, how often, and why

What is the MongoDB profiler?

You might think that you’ve told the database what to do, but any query language actually only specifies the result you want and the set-based operation you require.

The database system decides how to do it and what physical operations to perform.

MongoDB comes with a built-in profiler that gives query-level insight as to why the database chose these operations.

The MongoDB profiler allows you to see what queries are being run, either by collecting all of them or sampling time-slices of them.

Generally, you’ll leave the profiler switched off unless you are collecting information or monitoring the usage of the database, because it has to store the information it collects.

When it collects queries, it does so from the entire instance, which means all your databases and connections on that instance. It can be turned on or off from applications or from the shell.

Configuring the MongoDB profiler

The profiler stores the queries in db.system.profile. This can be queried just like any other database, so you can find a lot of information quickly.

As well as the source of the query or command, there is a summary of the execution stats that tell you, amongst other information:

- when the query was run

- how fast it ran

- how many documents were examined

- the type of plan it used

- whether it was able to fully-use and index

- what sort of locking happened

This will take you a long way down the route of diagnosing performance problems.

You can check your current profiling status using the command:

db.getProfilingStatus()

We can then change the status of the MongoDB profiler according to what we want it to do.

- Level 0 – The profiler is off and does not collect any data. This is the default profiler level.

- Level 1 – The profiler collects data for operations that take longer than the value of

slowms, which you can set. - Level 2 – The profiler collects data for all operations.

We’ll start by setting it to Level 2.

Using the mongo shell or a GUI-integrated shell like Studio 3T‘s IntelliShell, run the following command:

db.setProfilingLevel(2)

which, in my case, returns:

‘{ "was" : 0, "slowms" : 100, sampleRate: 1, "ok" : 1 }’

This tells me that it was successful and the level was 0 (off). It also tells me that I’ve set the threshold for level 1 use at 100 Ms so that only those queries that take longer than that are recorded if I switch to level 1.

We can then confirm that it is now at level 2 by executing the db.getProfilingStatus() command.

Useful queries for MongoDB performance tuning

Let’s start by collecting all our queries, formatting them nicely.

Sort queries by when they were recorded, showing just commands

db.system.profile.find({

"command.pipeline": { $exists: true }

}, {

"command.pipeline":1

}).sort({$natural:-1}).pretty();

I’ve added the ‘pretty()’ method so as to make it easier to see the code of the command.

Normally, you’d want to specify the namespace (“ns”) in the query.

Find all queries doing a COLLSCAN because there is no suitable index

// all queries that do a COLLSCAN

db.system.profile.find({"planSummary":{$eq:"COLLSCAN"},

"op" : {$eq:"query"}}).sort({millis:-1})

Find any query or command doing range or full scans

// any query or command doing range or full scans

db.system.profile.find({"nreturned":{$gt:1}})

Find the source of the top ten slowest queries

We can show the source, nicely formatted, of the top ten slowest queries. This query lists the source of the top slowest queries in the order of slowest first.

db.system.profile.find({"op" : {$eq:"query"}}, {

"command" : NumberInt(1),

"millis": NumberInt(1)

}

).sort({millis:-1}).limit(10).pretty()

Find the source of the top ten slowest aggregations

// the source of the top ten slowest commands/aggregations

db.system.profile.find({"op" : {$eq:"command"}}, {

"command.pipeline" : NumberInt(1),

"millis": NumberInt(1)

}

).sort({millis:-1}).limit(10).pretty()

Find all queries that take more than ten milliseconds, in descending order, displaying both queries and aggregations

//find all queries that take more than ten milliseconds, in descending order,

displaying both queries and aggregations

db.system.profile.find({"millis":{$gt:10}}) .sort({millis:-1})

For initial development work this sort of query is very useful, particularly because each document stores performance information along with queries.

Probably easier for finding your long-running queries and aggregations is:

//find all queries and aggregations that take more than ten milliseconds, in descending

order, displaying either the query or aggregation

db.system.profile.find({ "millis": { $gt: 10 } }, { millis: NumberInt(1),

"query": NumberInt(1), "command.pipeline": 1 }).sort({ millis: -1 })

Commenting your queries

At some point, you’ll discover that it is a great advantage to comment your queries so you can select the one you want by searching for the comment, and you’ll be able to immediately be able to work out what the long-running query is from the comment.

db.Customers.find({

"Name.Last Name" : "Johnston",

$comment: "Find all Johnstons and display their full names alphabetically"

}, {

"_id" : NumberInt(0),

"Full Name" : NumberInt(1)

}).sort({

"Name.First Name" : NumberInt(1)

})

And then you can find all queries with a particular comment or all the commented queries:

//display all queries with comments

db.system.profile.find({ "command.comment": {$exists: true} }, { command: 1 }).pretty()

It is also possible to put a comment in the $match stage of an aggregation expression:

db.Customers.aggregate(

[{$match : { "Addresses.County": { $eq: "Yorkshire" },

$comment: "This is for the number of notes for Yorkshire customers" }

},

{$group: {_id: "$Name.First Name", NoOfNotes: {$avg: {$size: "$Notes"}}}},

{$sort : {NoOfNotes : -1}},

{$limit:10}])

What makes a MongoDB query slow?

Because we just need to know what the slow queries are, we need to specify what we consider to be slow: meaning the criterion in milliseconds.

You can, of course, store all queries and aggregations, and just select the queries that meet your criteria.

// find anything that took more than 20 ms

db.system.profile.find({"millis":{$gt:20}})

You are likely to find, in a system that is under load, that there is much too much ‘noise’: very rapid queries doing minor housekeeping tasks such as logging usage.

In this case, it is much better to use profiling level 1, which stores only the slow queries.

You may be investigating locking rather than pure performance, or you may be checking for range or full scans.

Sometimes you will have a query that is run very frequently, such as an authentication routine for a web page. It will take a moderate amount of time, but is inefficient.

Here you will want the criterion of a cumulative time over a time period, so you’d need full profiling at level 2. It is, however, often useful to have the option of storing only slow queries.

Here we choose to collect just the data for operations that take longer than the criterion value of slowms. At the same time, we set slowms to 30 ms.

db.setProfilingLevel(1, { slowms: 30 })

There are several resources on the internet that have examples of MongoDB profiler queries:

Using the explain() method to work out why a query is slow

Once you have identified the query that will make the most overall difference once its performance is improved, you need to work out how the query is being executed.

Each database system has a different way of telling you the strategy it is using for a query and how well it has performed. It is usually called ‘Explain’.

In any database system, this strategy is a set of operations that the execution engine needs to perform to return the data required by a query.

The database chooses the winning strategy by judging the relative merit of the alternative strategies. If you haven’t provided the means to enable the query to run quickly with an effective indexing strategy, such as by providing a usable index, it is up to you.

MongoDB provides an ‘explain’ facility that gives you a tool for troubleshooting queries, which comes in three flavors:

db.collection.explain()method- the

cursor.explain()method - the

explaincommand

All three can be used to return information on query plans and the execution statistics of those query plans.

To find out the strategy a query uses, the cursor that you use for queries – usually created in the db.collection.find() method – has an explain() method that will tell you about the query plan.

Instead of a result, the explain() method runs the query optimizer to choose the winning plan for the operation specified in the cursor, runs the query, and returns a document with the query plan that was used describing the execution of the winning plan and, if you specify it, the execution statistics.

The document which outlines the query plan has three main sections:

- queryPlanner – details the plan selected by the query optimizer and, optionally, lists the rejected plans

- executionStats – shows how the winning plan, and optionally the rejected plans, were executed

- serverInfo – tells you about the MongoDB instance that executed the query

You will most often use it like this:

db.collection.find().explain()

You can specify the amount and type of the information you get in the explain output by using the verbose parameters “queryPlanner”, “executionStats”, and “allPlansExecution”.

If you don’t specify anything, the method uses “queryPlanner” verbosity which returns the queryPlanner information for the evaluated method.

If you specify “executionStats”, you get the queryPlanner and executionStats information for the evaluated method, but not the query execution information for the rejected plans.

To get this, you need to ask for “allPlansExecution” mode. You then also get the statistics for the other candidate plans that the query optimizer selected.

There is another way of using explain(), which is to call the method on the collection:

db.collection.explain().find()

By doing this, you can get an explanation of the query plan of aggregations, or in any other circumstances where you are chaining query modifiers.

Here, I’m using the alternative syntax to check the query plan of an aggregation query on our sample Customers database.

I’ve added a simple way of giving an end-to-end timing. This can be:

start = new Date;

db.Customers.explain().aggregate({$project :{"Name.First Name": 1}},

{$group :{_id: "$Name.First Name", count : {$sum: 1}}},

{$sort : {count : -1}},

{$limit:10}

);

print(new Date - start + 'ms')

The execution plan

The first sight of the query plan and the execution stats can cause some initial twinges of alarm, but are fairly easy to read with a brief explanation.

I will need to be slightly more verbose, because without some up-front explanation it will remain slightly baffling.

The explain.queryPlanner values

MongoDB, when faced with a query, needs to determine a reasonable strategy for executing the query.

This is a black art in any good database system, since an execution plan that works well in one context may be grindingly slow in a different context. MongoDB has a query planner that selects an existing cached plan or creates one for the query.

To process a query, the query planner searches the query plan cache to see whether a suitable query plan exists in cache that can be reused.

If there isn’t, then it generates as many alternative plans as it can, based on the nature of the query, the available indexes, and the data distribution.

If there is more than one, it tries each in turn. The winning plan is compiled and placed in cache, so it can be reused.

If a suitable plan already exists, the query planner evaluates it via a ‘replanning’ mechanism.

Based on how well it does, the Planner either keeps or evicts the cache entry, and replaces it with a new plan. The query planner executes the plan and returns the results.

The query plans are represented as a tree of stages, in which each stage passes its results to its parent node.

The leaf nodes create documents by accessing the data within either the collection or the queries’ chosen index. They pass this document data to their parent nodes which then manipulate them.

The root node derives the result set from its child stages and assembles the returned result.

Each stage has a name. The name describes the operation. The most important names are:

- COLLSCAN – Collection scan

- IXSCAN – Scan of data in index keys

- FETCH – Retrieving documents

- SHARD_MERGE – Merging results from shards

- SORT – Explicit sort rather than using index order

There are other undocumented names for stages, such as:

- ‘KEEP_MUTATIONS‘ – where possible, merge results that are invalidated mid-query back into the query results

- ‘LIMIT, AND_SORTED‘ or ‘AND_HASH‘ – part of an intersection plan

- ‘EOF‘ – end of file

- ‘SORT_KEY_GENERATOR’ – extracts a field(s) from a database to be sorted later

A record of all this activity is within the explanation.

explain.queryPlanner.namespace holds the value of the namespace against which the query is run.

- You will know whether an index filter was used from

explain.queryPlanner.indexFilterSet - The winning plan is detailed in explain.queryPlanner.winningPlan as a tree of stages named in

explain.queryPlanner.winningPlan.stage - Each one, unless it is a leaf, will have one

inputStageor severalinputStages - Each stage will only have the relevant details that are appropriate for the type of stage. If any candidate plans were considered, they will be held in

explain.queryPlanner.rejectedPlans

The executionStats values

The executionStats give you the story of how the winning strategy actually performed, as long as you specified it by running the explain in executionStats or allPlansExecution.

If it is describing a write operation, it doesn’t actually perform the operation in explain mode, but merely describes it.

When an execution plan is executed, each stage of the plan is done so in small “work units” which might involve examining a single index key, fetching a single document from the collection, applying a projection to a single document, or doing a piece of internal bookkeeping.

It is a useful indication of the effort that the stage took. Each stage ends up with intermediate results that are returned to its parent stage.

One of the most useful nuggets of information is whether the index used was a covering one. If it has, then the explain result has an IXSCAN stage that hasn’t a got a FETCH branch, and the explain.executionStats.totalDocsExamined is 0.

You can also see whether the index used by the plan could be used to determine the requested sort order, or whether it had to use a SORT stage instead.

If there was a SORT stage that used a key/value in the collection, you can be sure that the index could be improved to allow the plan to sort the result. Was the collation the same? Were the index keys in the same order as the requested sort order?

Here’s some other useful information you can find out from the execution stats:

explain.executionStats.nReturned– tells you how many documents in the collection matchedexplain.executionStats.executionTimeMillis)– tells you how long it tookexplain.executionStats.totalKeysExamined– tells you the number of index entries scannedexplain.executionStats.totalDocsExamined– tells you.the number of documents scanned

The performance of each stage in the execution plan is described in a similar way to the plan, as explain.executionStats.executionStages details the completed execution of the winning plan id held as a tree of stages like the execution plan itself, with only the relevant details.

Each stage consists of execution information specific to the stage, such as the number of intermediate results returned by the stage (explain.executionStats.executionStages.advanced) or the number of work units used (explain.executionStats.executionStages.works).

It will also give information as to how many times it was blocked by another process and had to yield its locks and save its state.

It is useful to know how many documents were scanned during a COLLSCAN or FETCH (explain.executionStats.executionStages.inputStage.docsExamined) and whether it reached the end of the stream (explain.executionStats.executionStages.isEOF)

If you want to see whether the plan involved an index intersection, you’ll see either an AND_SORTED stage or an AND_HASH stage and there will be an inputStages array that details the indexes.

Likewise, if MongoDB uses indexes for an $or expression, the result will include the OR stage with an inputStagesarray that details the indexes.

Conclusions

MongoDB has a very sensible range of information that is available for tuning the performance of queries and aggregations.

When tuning queries, it generally pays to pick off the worst-offending ones first and to tackle the most obvious problems first.

There is a lot of information available on query execution, but whilst it is interesting, most of it is only important once you have tried and failed with the obvious remedies. Only then is it necessary to wade into the more esoteric information such as locking, selectivity or data distribution.

The MongoDB profiler and the explain methods will take you a long way. After all, many problems become pretty obvious just by staring at the source of the query.

FAQs about MongoDB performance tuning with Query Profiler

The impact depends on how heavy the database load is. If the server load is already heavy, using the Profiler switch to enable the MongoDB Database Profiler increases the demand on the server.

When the profiler is switched on it also uses disk space because it has to store the profiling data it has collected.

The MongoDB Database Profiler stores data in the system.profile collection.

In Studio 3T, you can view and query the system.profile collection in a Collection Tab just like any other collection. Simply locate the profiled database in the connection tree, open the System folder, and double-click the system.profile collection.

The Profiler switch in the Query Profiler sets the profiling level to 1 when you switch it on to enable the MongoDB Database Profiler.

The Profiler switch in the Query Profiler sets the profiling level to 0 when you switch it off to disable the MongoDB Database Profiler. No further data is collected but you can still view existing profiling data.

If you want to collect profiling data for all operations, you can set the profiling level to 2 in IntelliShell or in the mongo shell, using the db.setProfilingLevel() method. See the MongoDB documentation, for a full description.

Note that if you modify the db.setProfilingLevel() method and apply a filter so that data is not collected for find queries or aggregation queries, then the Query Profiler cannot display slow queries for those operations.

You can change the profiling threshold in IntelliShell or in the mongo shell, using the slowms setting on the db.setProfilingLevel() method. By default, the slow operation threshold is 100 milliseconds. See the MongoDB documentation, for a full description of the slowms setting.

The Query Profiler won’t display profiling data if your MongoDB user doesn’t have find privileges on the system.profile collection.

You may find that going back further in time and selecting a broader timeframe retrieves profiling data. To do this, use the Results for last drop-down list.

To view profiling information from system.profile for find and aggregation queries, select the database in the Studio 3T connection tree and open the Query Profiler.

In the Query profiler, the most recent slow queries are displayed at the top of the list. You can:

- filter the operations by collection, by selecting the required collection in the Collection drop-down list.

- filter the operations by a particular time range, by selecting a timeframe in the

Results for last drop-down list, for example slow queries in the last 30 minutes, 7 days, or 30 days.- sort the results by how long each query took to run, by clicking the duration headers,

Sum of durations, Average of durations, Max duration, or Min duration.

A good place to start optimizing MongoDB queries is indexing strategy. Take a look at the Knowledge Base article How to Optimize MongoDB Queries Using find() & Indexes, where we explain the basics and provide simple examples.

If you know you don’t need to return all the documents in a collection, it’s a good idea to limit the number of results using the limit () method in your queries. And, you should only return the fields that you need using projections.

In the Query Profiler, go to the Explain plan tab. If the query has used an index, you will see an Index scan stage, otherwise you will see a Collection scan stage.

Locate the collection in the connection tree, right-click, and select Add Index. If you’re unsure of the collection name, you’ll find it in the Namespace field in the Query Profiler.

Create the index using the Add index dialog, see Adding an index for more information. The Add index dialog is part of the Index Manager.

If the slow running query is already using an index and you want to change the indexed fields, first locate the collection in the connection tree. If you’re unsure of the collection name, you’ll find it in the Namespace field in the Query Profiler. Then under Indexes, double-click the index you want to modify.

Change the fields using the Edit index dialog, see Editing an index for more information. The Edit index dialog is part of the Index Manager. When you change the indexed fields using the Index Manager, Studio 3T drops the index for you and recreates the index. Recreating indexes has an impact on database performance.

In Studio 3T’s Query Profiler, the Explain plan tab provides statistics about the performance of each stage in the query plan and when the query ran. The Explain plan tab also shows you if a query is using an index and how it is using it.

By default, the Query Profiler displays the most recent slow query at the top of the list. You can sort the queries by when they ran by clicking the header on the Most recent occurrence field.

Depending on the timeframe you have selected, when you fix a query you may find it disappears from the Query Profiler. This is expected behavior. When the query runs, its performance has improved beyond the profiling threshold. In other words, it takes less than 100 milliseconds to run.

For replica sets, the profiler works individually for each set member. By default, the primary is selected when you open the Query Profiler. To profile secondaries, select each set member in turn using the connection drop-down list and use the Profiler switch to enable the MongoDB Database Profiler for that set member.

Explore more about optimizing MongoDB queries

Interested in learning more about optimizing MongoDB queries? Check out these related knowledge base articles:

- How to speed up MongoDB queries with Studio 3T’s Performance Suite for tips on improving MongoDB query performance

- MongoDB Indexes | A Complete Guide and Tutorial to boost MongoDB performance by creating indexes with Studio 3T’s Index Manager

- Visual Explain | MongoDB Explain, Visualized to help you understand the execution plan and analyze query performance

Alternatively, you can check out MongoDB performance tips and best practices on the MongoDB website:

The tutorial in this article was originally written by Phil Factor.

Phil Factor (real name withheld to protect the guilty), aka Database Mole, has 30 years of experience with database-intensive applications. Despite having once been shouted at by a furious Bill Gates at an exhibition in the early 1980s, he has remained resolutely anonymous throughout his career.